60% of high-ticket funnels lose more revenue before checkout than during it. That hidden bleed kills ROI, inflates CAC, and makes ad spend futile at scale.

We name the problem with precision: funnels rarely fail at one point. They leak in multiple parts under unseen conditions. Small losses compound until lifetime value collapses.

Our promise is simple and pragmatic. We map a six-stage diagnostics loop—Verify, Collect, Evaluate, Test, Rectify, Check—and apply it with crisp data and real-world tests.

We translate proven diagnostics across disciplines into growth work. For example, a 42% add-to-cart figure is logged as a symptom, then framed as a testable hypothesis. From there we isolate causes, find the root, and fix the system instead of patching pages.

For premium brands with complex stacks, attribution noise, and rising CAC, this is the way to restore profitable throughput. We build measurable, time-bound steps so outcomes are defensible, repeatable, and scalable.

Key Takeaways

- Most funnels leak across multiple parts, not just at the finish line.

- We use a six-stage diagnostics loop to isolate symptom vs. root.

- Concrete data and staged tests beat guesswork every time.

- System-level fixes prevent recurring revenue bleed.

- High-ticket brands need measurable, time-bound corrections to protect ROI.

The leak you can’t ignore: why high-ROI funnels fail today

High-ROI funnels fail quietly when external conditions shift faster than our measurement systems. We see growth metrics hold while cashflow tightens. That mismatch signals hidden problems, not luck.

Intake matters first. We capture precise symptoms with context—device, geo, traffic source, page speed, and offer state. This knowledge narrows likely causes before testing.

Scanners surface codes: GA4 alerts, event anomalies, CRM slippage. Those codes point the way but never replace hands-on inspection. We treat them as guides, not answers.

Most issues are environmental. Like plant care, funnels suffer from abiotic conditions—ad fatigue, UX drag, consent loss—before competitors become the cause. Time compounds risk: unresolved leaks distort attribution and erode trust.

- Capture — document symptom, time window, and segment.

- Triage — map symptom to likely failure modes.

- Act — run targeted tests and escalate where ROI is fastest.

| Intake Field | Why it matters | Typical signals | Fast action |
|---|---|---|---|
| Device & OS | Surfaces platform-specific friction | Drop by mobile browser, high bounce | Run device-specific session replays |
| Traffic Source | Reveals channel volatility | Conversion dip on one campaign window | Pause or re-route spend; test creatives |
| Timing & Windows | Exposes time-based anomalies | Daypart or promo-period declines | Compare before/after deploys; isolate releases |
| Offer State | Clarifies message-market fit | Higher drop on specific SKUs or pages | Swap offers or adjust proof points |

We act like operators, not spectators. Document fast, test precisely, and escalate precision each pass. That is the way premium brands protect ROI under shifting conditions.

From symptom to root cause: translating diagnostics to funnel management

Noticing a symptom starts a disciplined hunt for the real cause. We label what we see, then strip layers until the root appears. Clear labels keep tests tight and outcomes defensible.

Symptom vs fault vs root cause

Symptom is the visible problem in the funnel — a checkout drop or bounce spike.

Fault is the immediate error that produces that symptom — a payment token failure or a layout shift.

Root Cause is the upstream generator — SDK conflict, recent deploy, or consent change.

The six-stage diagnostic loop for growth systems

- Verify — confirm metrics and scope.

- Collect — gather qualitative and quantitative information.

- Evaluate — narrow likely causes with disciplined filters.

- Test — run logical, staged experiments.

- Rectify — apply targeted fixes.

- Check — validate full-system stability.

| Stage | Action | Outcome |
|---|---|---|
| Verify | Baseline metrics, segment windows | Confirm problem exists |
| Collect | Session replays, logs, user notes | Actionable information |
| Evaluate | Prioritize tests by probability | Focused test plan |
| Test | Staged changes, A/B, rollback plan | Isolate fault |

Example: a PDP bounce spike is the symptom; a CLS shift is the fault; a third-party script reorder is the root. We defer the script, stabilize styles, then check heatmaps and conversions.

In practice: every case documents who ran which steps and what information moved probabilities. That record converts one-off fixes into reliable management across systems, shortening time to win and reducing repeat problems.

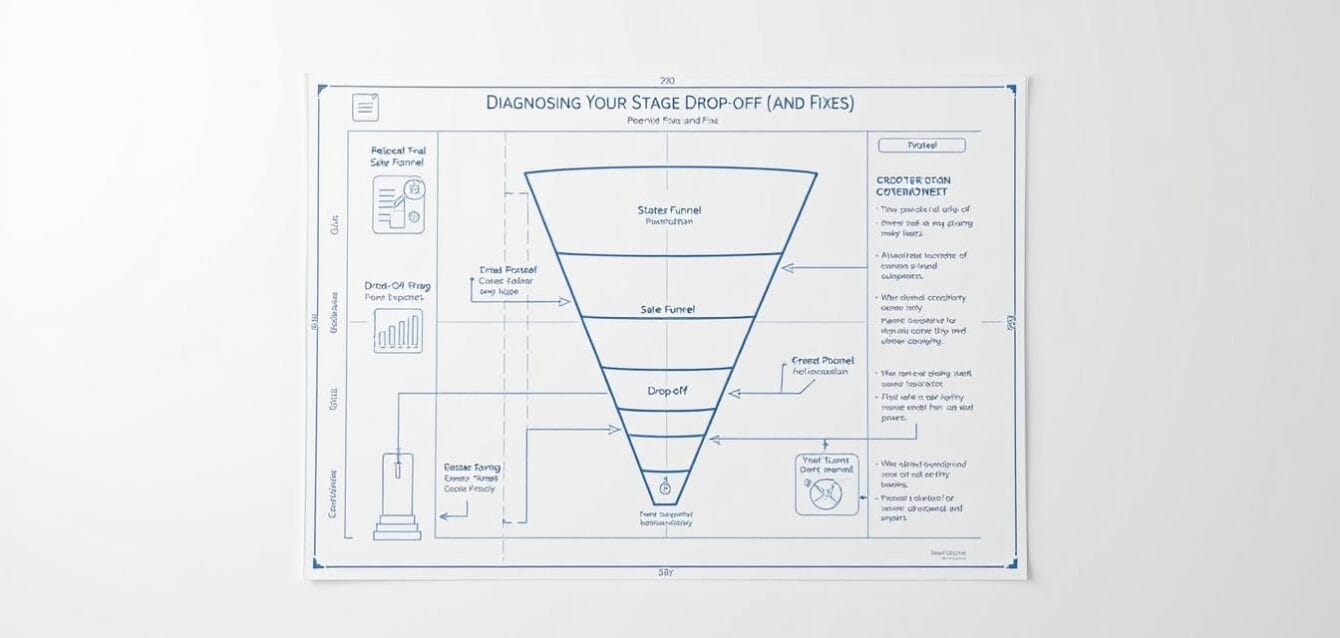

Diagnosing Your Stage Drop-Offs (and Fixes)

The first move is verification—remove doubt with precise period-over-period and segment data.

Verify

We confirm the problem with trustworthy data. Compare windows, devices, and campaigns to rule out seasonality or sampling noise.

Metric checklist: baseline conversion, segment delta, and timeframe alignment.
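The checklist above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `WindowStats` type, the `segment_deltas` helper, and the sample numbers are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Sessions and conversions for one segment in one time window."""
    sessions: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.sessions if self.sessions else 0.0


def segment_deltas(baseline: dict, current: dict) -> dict:
    """Relative conversion-rate change per segment present in both windows."""
    deltas = {}
    for segment in baseline.keys() & current.keys():
        base_rate = baseline[segment].rate
        if base_rate == 0:
            continue  # no baseline to compare against
        deltas[segment] = (current[segment].rate - base_rate) / base_rate
    return deltas


# Illustrative numbers only, not real data.
baseline = {"mobile": WindowStats(1000, 50), "desktop": WindowStats(800, 48)}
current = {"mobile": WindowStats(1000, 30), "desktop": WindowStats(800, 48)}
deltas = segment_deltas(baseline, current)
```

In this made-up sample, mobile falls by roughly 40% relative to baseline while desktop is flat, which would scope the problem to one device segment before any test is planned.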

Collect

We gather context: GA4 events, CRM volumes, session replays, device mix, and content variants.

Collect both numbers and notes so tests are not blind and decisions stay fast.

Evaluate

We stop and think. Rank hypotheses by likely impact and cost to test.

This narrows probable causes and keeps diagnostic spend focused.
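One way to make the ranking explicit is a simple expected-value score. The sketch below assumes a scoring rule of probability times impact divided by test cost; that formula, the `Hypothesis` type, and the sample figures are illustrative assumptions, not a method stated in this article.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    probability: float  # estimated chance this is the cause, 0..1
    impact: float       # estimated revenue at stake if it is the cause
    test_cost: float    # estimated cost to test, must be > 0

    @property
    def score(self) -> float:
        # Higher score means more expected value per unit of test cost.
        return self.probability * self.impact / self.test_cost


def rank(hypotheses: list) -> list:
    """Return hypotheses ordered from best to worst score."""
    return sorted(hypotheses, key=lambda h: h.score, reverse=True)


# Illustrative estimates only.
candidates = [
    Hypothesis("Form copy confusion", 0.2, 10_000, 500),
    Hypothesis("Checkout event schema error", 0.5, 40_000, 1_000),
    Hypothesis("Mobile layout shift", 0.3, 20_000, 2_000),
]
ranked = rank(candidates)
```

The inputs are estimates, so the ranking is only as good as the evidence gathered in the Collect stage; the value of the exercise is that the ordering is written down and can be challenged.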

Test

Run logical, staged experiments that isolate one variable at a time—copy, forms, or load speed.

Each test must answer a single question and include a rollback plan.
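For a single-variable test on conversion rate, a two-proportion z-test is one common way to check whether the difference between control and variant is larger than sampling noise. The sketch below uses only the standard library; the function name and sample counts are assumptions for illustration, and teams may prefer a different statistical method.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, nothing to detect
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts only: control (a) vs. variant (b).
z, p_value = two_proportion_z_test(50, 1000, 80, 1000)
```

A small p-value suggests the variant's change is unlikely to be noise, but it says nothing about which variable caused it. That is why each test should change only one thing.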

Rectify

When evidence is decisive, ship targeted fixes: event schema corrections, layout refactors, or message tweaks.

Document the error fixed and the operational steps taken to reduce repeat incidents.

Check

Validate end-to-end stability across pages, devices, and channels. Confirm control metrics before closing the loop.

We log confirmed causes so the team gains repeatable control and faster time-to-confidence.

- Quick wins: prioritize fixes that restore revenue fastest.

- Controlled experiments: reduce risk and isolate learning.

- Operational discipline: track steps to prevent drift.

| Stage | Immediate Action | ROI Focus |
|---|---|---|
| Verify | Baseline comparison, segment checks | Avoid wasted tests; stop false positives |
| Test | One-variable experiments, rollback ready | Fast confidence; measurable lift |
| Check | End-to-end validation, cross-channel audit | Prevent regressions; secure gains |

In short: follow these six steps as a practical way to turn a scattered problem into a controlled, repeatable improvement path.

Your “OBD-II” for funnels: the analytics tool stack and what each detects

Think of the analytics stack as a vehicle fault reader—each layer reports codes that need translation. We map which tools surface signals and which require deeper inspection.

Platform scanners: GA4, CDPs, and event integrity checks

Platform scanners surface visible codes: event drops, attribution gaps, consent loss, and sudden metric shifts. They tell us which symptoms exist and where to begin.

Manufacturer-specific tools: CRM and MAP diagnostics for deeper data

CRMs and MAPs act like dealership interfaces. They run bidirectional queries, field-level audits, and stage progression checks to reveal hidden system mismatches that create pipeline stalls.

Live data views: real-time dashboards for transient issues

Real-time dashboards catch bursty latency, third-party outages, and transient consent impacts before daily reports digest them.

“Platform codes point the way; manufacturer tests confirm the cause.”

- Example: GA4 flags add_payment_info decline; CRM shows MQL-to-SQL lag; MAP logs payload failures — together they triangulate the first test: event integrity at checkout.

- We record a live sample of events and simulate buyer paths under production load to verify hypotheses.

- Outcome: faster isolation, fewer false alarms, cleaner executive data and repeatable steps to restore ROI.
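An event integrity check like the one in the example above can be sketched as a required-field audit over a sample of recorded events. The event shape (`name` plus `params`) and the `REQUIRED_FIELDS` map are assumptions for illustration; they are not an official GA4 schema, and a real audit would use the team's own tracking plan.

```python
# Hypothetical tracking plan: fields each event must carry.
REQUIRED_FIELDS = {
    "add_payment_info": {"currency", "value", "payment_type"},
    "purchase": {"currency", "value", "transaction_id"},
}


def missing_fields(event: dict) -> list:
    """List required params that are absent or empty on one event."""
    required = REQUIRED_FIELDS.get(event.get("name"), set())
    params = event.get("params", {})
    return sorted(f for f in required if params.get(f) in (None, ""))


def integrity_report(events: list) -> dict:
    """Group missing-field findings by event name."""
    report = {}
    for event in events:
        missing = missing_fields(event)
        if missing:
            report.setdefault(event["name"], []).append(missing)
    return report


# Illustrative sample, not real traffic.
sample = [
    {"name": "add_payment_info", "params": {"currency": "USD", "value": 1200.0}},
    {"name": "purchase",
     "params": {"currency": "USD", "value": 1200.0, "transaction_id": "T1"}},
]
report = integrity_report(sample)
```

Here the report flags `add_payment_info` events that arrive without a payment type, which is the kind of finding that turns a vague decline into a specific first test.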

Manual inspections that scanners miss: UX, content, and compliance checks

Elite teams inspect journeys by hand to catch subtle quality failures in context. Automated tooling finds patterns; human review interprets perception, tone, and intent.

Friction hunt: forms, load speed, and layout issues

We run a premium friction hunt because scanners miss human perception issues. Broken field validation, confusing form copy, intrusive modals, and layout shifts erode perceived quality.

Under live conditions—network throttling, mobile viewports, cookie consent banners—we manipulate parts of the path to surface intermittent problems. Test drives validate issues that only appear when the journey is physically used.

Message-market fit: offer clarity, risk reversal, and proof

We examine offer clarity, guarantees, and proof density to address hesitation at its cause. Case studies, testimonials, and clear risk reversal reduce friction and lift trust for premium service experiences.

“Visual inspection reveals the worn parts that analytics never show.”

| Inspection Area | What We Check | Why it Matters |
|---|---|---|
| Forms | Field masks, error tone, submit flow | Reduces friction; prevents false errors |
| Layout | Sticky elements, z-index, CLS | Protects CTA visibility and perceived quality |
| Compliance | Consent copy, ADA checks, disclosures | Builds trust for high-ticket purchases |

Example: a sticky chat widget overlaps the CTA on mobile; sample users hesitate and abandonment rises. Fix spacing and z-index, and conversions recover without extra spend.

We document information with screenshots, timings, and expected vs actual behavior so engineering and content teams can work efficiently. This is hands-on work that closes leaks scanners can’t see and preserves brand equity at scale.

Test drives under real conditions: replicating drop-offs by context

We simulate real buyer journeys to force intermittent problems into view.

We run scenario tests like true test drives. Devices, geos, traffic sources, and intent temperature vary so symptoms show where they actually matter.

Sample paths include homepage, advertorials, and direct-to-PDP. We sample multiple entry points to isolate friction and prioritize the highest-volume path first.

Instrumentation during motion

Live instrumentation captures what snapshots miss. Session replays, console logs, network traces, and real-time event streams record behavior under load.

We standardize tools—device farms, network throttlers, and log aggregators—so each test is repeatable and conclusive within a defined time budget.

“Observation under load reveals the in-motion faults that static diagnostics never surface.”

- Case: paid social warm traffic converts until geo shifts; copy idioms and proof need recalibration.

- Diagnostics in motion reveal lag spikes, consent banners, or misfiring tags during promo bursts.

- Each scenario ends with one decision: fix, monitor, or escalate.

| Focus | Tool | Outcome |
|---|---|---|
| Device variance | Device farm | Reproduce mobile-only failures |
| Network load | Throttler & logs | Surface latency under promo traffic |
| Event integrity | Real-time stream | Validate payloads and tag fire |

Root Cause Analysis for funnels: play the best odds, not guesses

Root cause work turns noise into a prioritized plan that leaders can act on. We codify RCA so decisions stay fast, auditable, and repeatable.

Define, gather evidence, identify, correct, and ensure

First, define the precise problem and the point of failure. Then assemble evidence from analytics, session replays, and field observation so the diagnosis is factual.

Next, isolate likely causes using probability-led branching. We distinguish visible symptoms from true root causes and validate with controlled tests.

Avoiding false fixes: trace upstream

Correcting an error at the surface rarely suffices. We trace the system upstream—scripts, consent states, and integrations—so a patched form does not hide a deeper cause.

“Play the best odds: shorter loops, clearer evidence, and governance that prevents regression.”

All corrections follow change management: versioned deploys, rollback plans, and monitoring windows. We then revisit the diagnosis to confirm effectiveness and capture knowledge in decision trees and playbooks.

- Define — scope, metric, and window.

- Gather — logs, replays, and stakeholder notes.

- Isolate — validate the probable cause before shipping.

- Control — deploy with rollback and monitor.

- Codify — store the learning for faster future response.

Distribution patterns: where, when, and who your drop-offs affect

Pattern recognition converts scattered losses into a clear plan of action. We map how declines appear across channels and pages to choose the right test and the right order of work.

Landscape distribution: uniform, random, and hot spots

Uniform declines suggest broad conditions — site-wide latency, a tracking outage, or consent shifts. Treat these first; they hurt systems fastest.

Random drops look like living variability. Creative fatigue, audience churn, or segmentation noise often cause this pattern.

Hot spots point to localized problems: a campaign, SKU, or landing page that needs immediate attention.

On-page distribution: which parts and which segments

Map affected parts by step: top-of-funnel, product pages, or checkout. Then split by segment — new vs returning, device, and geo.

Use a representative sample of sessions per segment to confirm whether symptoms spread over time or appear in a single window.

Abiotic vs biotic analogs: systemic conditions vs campaign-specific issues

Plant diagnostics help: uniform, system-wide declines act like drought; clustered, time-varying problems act like pests. That lens ties patterns to likely causes.

“Fast pattern recognition directs scarce effort to the fixes that return revenue first.”

- Quick triage: system issues get immediate fixes; localized causes are sequenced by impact.

- Temperature matters: cold traffic needs more proof; a temperature-linked pattern signals messaging mismatch.

- Linear clusters: follow URL paths and UTM rules — deployments or platform rules often explain linearity.

| Pattern | Signal | Action |
|---|---|---|
| Uniform | Site-wide drop across channels | Check infra, tracking, consent |
| Random | Variable drops by campaign/day | Audit creatives, audience, cadence |
| Hot spot | High loss on specific page or UTM | Inspect page, offer, recent deploy |

In practice: read patterns first, design a tight sample test, then act on the most likely cause. This keeps fixes strategic, measurable, and ROI-focused.
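The three patterns can be roughed out as a heuristic over per-segment conversion deltas. This is a crude sketch under stated assumptions: the thresholds (a 10% relative drop counts as "affected", a quarter of segments or fewer counts as a hot spot) and the `classify_pattern` name are invented for illustration and would need tuning against real data.

```python
def classify_pattern(deltas: dict, drop_threshold: float = -0.10,
                     hot_spot_share: float = 0.25):
    """Label a set of per-segment relative changes and list affected segments."""
    if not deltas:
        return "unknown", []
    affected = sorted(s for s, d in deltas.items() if d <= drop_threshold)
    share = len(affected) / len(deltas)
    if share == 0:
        return "none", affected
    if share == 1.0:
        return "uniform", affected   # every segment dropped: suspect infra, tracking, consent
    if share <= hot_spot_share:
        return "hot spot", affected  # a few segments dropped: inspect those pages or campaigns
    return "random", affected        # scattered drops: audit creatives and audiences


# Illustrative deltas only.
pattern, segments = classify_pattern(
    {"lp-a": -0.40, "lp-b": 0.01, "lp-c": 0.00, "lp-d": -0.02}
)
```

A label from a heuristic like this is a starting point for the sample test described above, not a diagnosis.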

Timing analysis: seasonality, change logs, and the before/after of fixes

Timing is diagnostic glue: when an issue first appears often tells us which systems to inspect first.

We correlate first-seen symptoms with deployments, promo windows, and market events so the diagnosis anchors in time rather than guesswork.

Year-over-year and in-year comparisons adjust for seasonality, promo cycles, and macro swings. That context prevents mistaking expected variation for a real problem.

Maintain a rigorous change log. Tag releases, consent edits, pricing tweaks, and creative swaps so every case can be evaluated against known interventions.
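With a tagged change log in place, matching a first-seen symptom to recent interventions becomes a simple lookup. The sketch below assumes each entry is a dict with an `at` timestamp and a `what` description, and uses a 48-hour lookback; the entry shape, the window, and the sample dates are illustrative assumptions.

```python
from datetime import datetime, timedelta


def changes_before(first_seen: datetime, change_log: list,
                   lookback: timedelta = timedelta(hours=48)) -> list:
    """Return logged changes inside the lookback window, most recent first."""
    window_start = first_seen - lookback
    hits = [c for c in change_log if window_start <= c["at"] <= first_seen]
    return sorted(hits, key=lambda c: c["at"], reverse=True)


# Illustrative entries only.
change_log = [
    {"at": datetime(2024, 3, 1, 9, 0), "what": "consent banner copy edit"},
    {"at": datetime(2024, 3, 4, 15, 30), "what": "checkout script release"},
    {"at": datetime(2024, 3, 5, 8, 0), "what": "creative swap"},
]
first_seen = datetime(2024, 3, 5, 10, 0)
suspects = changes_before(first_seen, change_log)
```

Changes closest to the first-seen time come back first, which gives a reasonable default order for inspection. Proximity in time is a lead, not proof of cause.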

Temperature and event timing matter: traffic surges or platform outages create transient spikes that differ from structural failures. We sample the same segments and windows for before/after analysis so results are defensible years later.

“Centralized information and consistent sampling compress time-to-impact and raise the odds of a correct diagnosis on the first pass.”

- Classify simultaneous surface failures as systemic and accelerate rollback or hotfix.

- Flag gradual spread as likely campaign or audience drift and sequence work by ROI.

- Give executives a cadence: weekly timing reviews, monthly year comparisons, and release-aligned postmortems.

| Focus | Action | Outcome |
|---|---|---|
| First-seen event | Match to deploy & market calendar | Anchor cause hypothesis |
| Before/After | Same segment, same window | Defensible impact measurement |
| Sampling cadence | Centralized logs at fixed intervals | Stable diagnosis over years |

From diagnosis to durable corrections: prioritized fixes and quality control

A clear governance layer turns one-off findings into durable operational improvements.

https://www.youtube.com/watch?v=M6EQUP4iTHM

We convert diagnosis into action using decision trees that map each symptom pattern to likely causes and the minimal corrective steps.

Fixes get scored by impact, effort, and risk to service continuity so premium experiences stay intact during work windows.

Decision trees: mapping symptoms to causes and corrective actions

Decision trees steer teams to the highest-probability causes. Each branch specifies who acts, what scripts or deployments run, and which acceptance criteria must be met.

The three Cs—concern, cause, correction—standardize clear handoffs across management and service teams.
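A decision tree of this kind can be stored as plain data so it is easy to version and update. The sketch below is one possible shape, reusing two examples from earlier in this article; the `Branch` type, the symptom keys, the owner names, and the acceptance wording are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Branch:
    concern: str      # what was observed
    cause: str        # most probable cause for this branch
    correction: str   # minimal corrective step
    owner: str        # who acts (hypothetical team names)
    acceptance: str   # what must be true before closing


DECISION_TREE = {
    "pdp_bounce_spike": [
        Branch(
            concern="PDP bounce spike",
            cause="Layout shift after a third-party script reorder",
            correction="Defer the script and stabilize styles",
            owner="frontend",
            acceptance="Bounce rate back within baseline range",
        ),
    ],
    "mobile_cta_abandonment": [
        Branch(
            concern="Abandonment near the CTA on mobile",
            cause="Sticky chat widget overlaps the CTA",
            correction="Fix spacing and z-index",
            owner="frontend",
            acceptance="CTA fully visible on the device test matrix",
        ),
    ],
}


def next_steps(symptom_key: str) -> list:
    """Return candidate branches for a symptom, highest probability first."""
    return DECISION_TREE.get(symptom_key, [])
```

An unknown symptom returns an empty list, which is a useful signal in itself: the case needs a fresh diagnosis and, once resolved, a new branch.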

Rectify and recheck: confirm no new errors, regressions, or leaks

We implement corrections behind QA gates with device tests across critical parts of the journey. Acceptance ties to target metrics and the prior year benchmark so results are measurable.

Post-rectify, we recheck systems under live load and run regression sweeps on high-value segments to ensure no fresh symptoms appear.

“We formalize the work so fixes become improvements that last.”

- Prioritize by ROI, effort, and risk to service continuity.

- Ship with rollback plans, test matrices, and clear acceptance criteria.

- Document resolved symptoms and outcomes, and update decision trees for next year.

| Gate | Test | Outcome |
|---|---|---|
| Pre-deploy QA | Device matrix, smoke tests | No regressions in critical flows |
| Canary | Small segment live run | Metric delta within threshold |
| Post-release check | Regression sweep, logs | Closed issues, updated systems |
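The canary gate's "metric delta within threshold" check can be expressed as a single comparison. In the sketch below, the 5% maximum relative drop and the `canary_passes` name are assumptions for illustration; the right threshold depends on traffic volume and how noisy the metric is.

```python
def canary_passes(control_rate: float, canary_rate: float,
                  max_relative_drop: float = 0.05) -> bool:
    """True if the canary's rate is no more than max_relative_drop below control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    relative_delta = (canary_rate - control_rate) / control_rate
    return relative_delta >= -max_relative_drop


# Illustrative rates only.
ok_to_proceed = canary_passes(0.040, 0.039)   # 2.5% relative drop
must_rollback = not canary_passes(0.040, 0.030)  # 25% relative drop
```

A failed gate should trigger the rollback plan shipped with the fix rather than a debate, which is the point of agreeing on the threshold before deploy.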

Macro Webber operates this as a service model that guarantees disciplined management, preserves quality, and accelerates throughput. The result is durable performance gains that scale, not temporary blips on a dashboard.

Conclusion

Make decisions that pull revenue back into the funnel and hold it there.

In short: most revenue loss stems from a handful of critical problems across key parts of the journey. With the right tools, focused tests, and a disciplined loop, we identify true causes and resolve them at the root.

We verify, collect evidence, evaluate hypotheses, then run single-variable tests before we act. Manual inspection combined with scanners keeps symptoms from hiding in plain sight. That approach preserves service quality for years.

Act now: Macro Webber’s Growth Blueprint is limited to white-glove clients. Book a consult this week to secure priority access and measurable, defensible ROI.